Idiom®

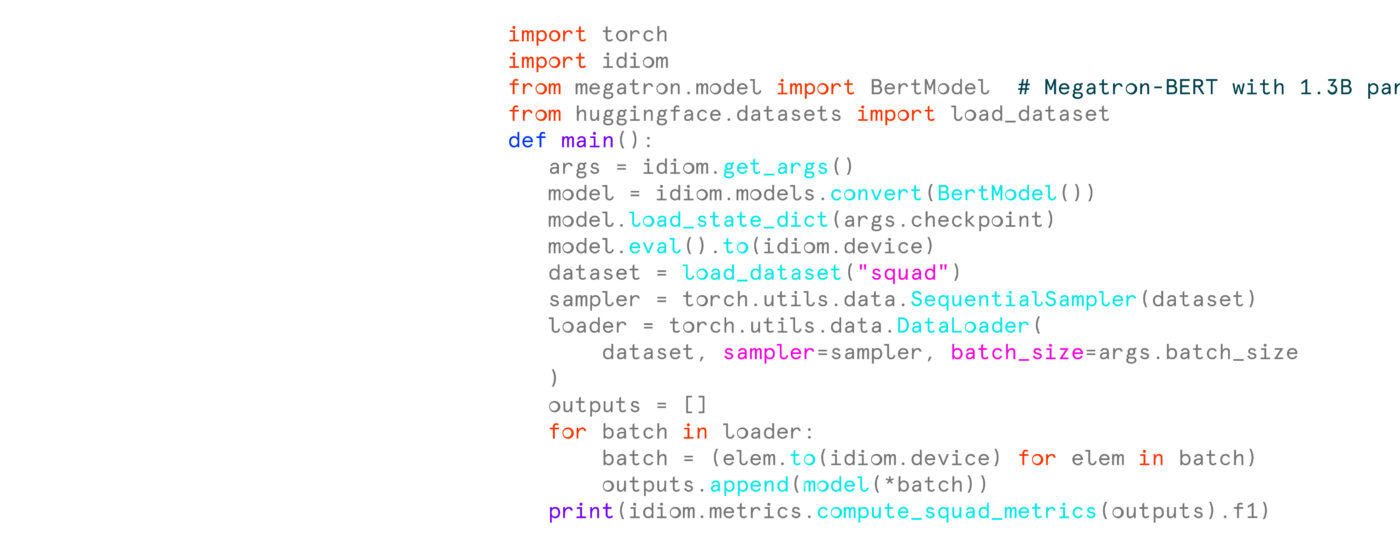

Interfacing with Deep Learning Frameworks

Idiom® interfaces with standard deep learning frameworks and model exchange formats, while providing the transformations and tools required by deep learning model authors and deployers.

Ease of use & workflow

Idiom® does the work of converting your model description into a program for the target hardware

- User selects Envise™ as their target hardware

- No changes to your PyTorch, TensorFlow, or ONNX files are necessary

Graph compiler

idCompile automates programming by partitioning (large) neural networks for parallel execution within and between Envise™ blades

- Automatic conversion of floating-point models for mixed-precision inference

- Automatic generation of optimized execution schedule

- Supports multiple parallelism strategies: data parallelism, model parallelism, and pipelining
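Converting floating-point weights to low-precision integers usually comes down to choosing a scale and rounding. The sketch below shows symmetric per-tensor quantization, one common scheme; it is an illustration, not idCompile's documented method, and the function names are hypothetical. In a mixed-precision setting, a compiler could pick a different `num_bits` per layer.

```python
def quantize_symmetric(values, num_bits=8):
    """Quantize floats to signed integers sharing one scale (symmetric, per-tensor)."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step, then clamp into [-qmax, qmax].
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the integer codes."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.0, 1.0]
codes, scale = quantize_symmetric(weights)
restored = dequantize(codes, scale)
```

Here the largest magnitude (1.27) sets the scale, so `codes` is `[50, -127, 0, 100]`, and each restored value is within half a quantization step of the original.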

Multi-blade Envise partitioning

Idiom® automatically performs the partitioning between multiple Envise™ blades.

- Proprietary Lightmatter® fiber-optic communication links connect Envise™ blades, while Idiom® synchronizes the Envise™ chips in a single runtime

- Automatic partitioning chooses the parallelism strategy that gives the best performance

- Automatically virtualizes each Envise™ blade, so multiple users can apportion the number of chips each one uses
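To make the partitioning idea concrete, the sketch below treats pipeline partitioning as the classic "linear partition" problem: split a chain of layers into contiguous stages, one per device, so that the slowest stage is as fast as possible. This is an assumed simplification, not Idiom's algorithm; the per-layer costs are hypothetical, and a real partitioner would also weigh memory capacity and inter-blade communication.

```python
from functools import lru_cache


def partition_layers(costs, num_devices):
    """Split layers into num_devices contiguous stages, minimizing the slowest stage.

    Returns (bottleneck_cost, stages), where each stage is a list of layer indices.
    """
    n = len(costs)
    if not 1 <= num_devices <= n:
        raise ValueError("need between 1 and len(costs) devices")
    prefix = [0]
    for c in costs:
        prefix.append(prefix[-1] + c)  # prefix[j] = total cost of layers[:j]

    @lru_cache(maxsize=None)
    def best(i, k):
        # Minimal bottleneck for layers[i:] on k devices, plus the split points used.
        if k == 1:
            return prefix[n] - prefix[i], ()
        result = (float("inf"), ())
        # Leave at least one layer for each of the remaining k - 1 devices.
        for j in range(i + 1, n - k + 2):
            rest, splits = best(j, k - 1)
            candidate = max(prefix[j] - prefix[i], rest)
            if candidate < result[0]:
                result = (candidate, (j,) + splits)
        return result

    bottleneck, splits = best(0, num_devices)
    bounds = (0,) + splits + (n,)
    stages = [list(range(bounds[s], bounds[s + 1])) for s in range(num_devices)]
    return bottleneck, stages
```

For example, `partition_layers([4, 2, 3, 7, 1, 5], 3)` returns `(9, [[0, 1, 2], [3], [4, 5]])`: the expensive layer 3 gets a device of its own, and no stage costs more than 9 units.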

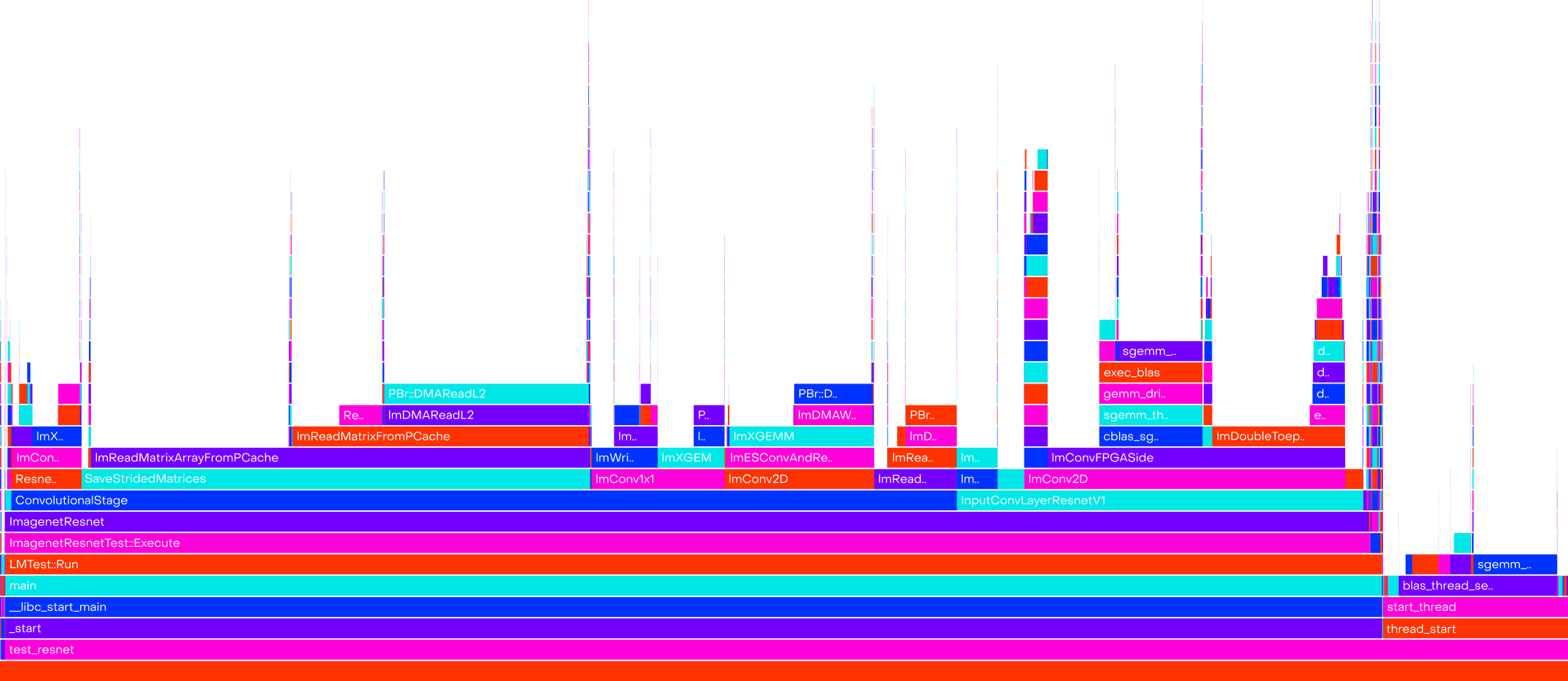

Debugging and Profiling

idProfiler provides an in-depth view of the neural network execution over multiple Envise™ devices

- Bird’s-eye view of the neural network program including memory usage

- Identifies bottlenecks and gives programmers the information they need to optimize their neural network models

- idBug helps locate errors within the parallel multi-chip program
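As a rough illustration of what "identifying a bottleneck" means for a model pipelined across several chips, the sketch below takes per-device stage latencies and reports the slowest device, the steady-state throughput it allows, and how busy every other device is. The input format and names are hypothetical; this is not idProfiler's output or API.

```python
def find_bottleneck(stage_times_ms):
    """Given per-device stage latencies (ms), report the pipeline bottleneck."""
    slowest = max(stage_times_ms, key=stage_times_ms.get)
    limit_ms = stage_times_ms[slowest]
    # In a steady-state pipeline, one sample completes per slowest-stage interval.
    throughput_per_s = 1000.0 / limit_ms
    # Fraction of each interval a device is busy; idle time hints at rebalancing.
    utilization = {dev: t / limit_ms for dev, t in stage_times_ms.items()}
    return slowest, throughput_per_s, utilization


profile = {"chip0": 2.0, "chip1": 5.0, "chip2": 4.0}  # hypothetical measurements
slowest, throughput, utilization = find_bottleneck(profile)
```

With these numbers, `chip1` limits the pipeline to 200 samples per second while `chip0` sits idle 60% of the time, which suggests moving work off `chip1`.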

Idiom® ML Libraries

idML is a complete set of machine learning tools with a PyTorch front-end

- Compresses and quantizes neural networks while maintaining performance

- Advanced quantization strategies including knowledge distillation and noisy quantization-aware training

- In-depth visualization of neural network performance across different hyperparameter choices

- Implements any neural network—from small to large, and from image processing to recommendation models
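Knowledge distillation, mentioned above as a quantization strategy, trains a compact (for example, quantized) student model to match a full-precision teacher's softened outputs as well as the true labels. The sketch below computes the standard distillation loss from Hinton et al. (2015) in plain Python so it runs without dependencies; it is not idML's API, and the function names, `temperature`, and `alpha` defaults are assumptions. In a PyTorch workflow the same formula would be written with tensor operations.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with an optional temperature."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Blend hard-label cross-entropy with KL(teacher || student) on softened outputs."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    hard = -math.log(softmax(student_logits)[label])
    # Scaling by T^2 keeps the soft-target gradients comparable across temperatures.
    return alpha * hard + (1 - alpha) * temperature ** 2 * kl
```

When the student already matches the teacher, the KL term is zero and only the hard-label term remains; a higher `temperature` exposes more of the teacher's relative confidence across wrong classes.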

MACHINE LEARNING

Computer vision

Natural language processing

Sentiment analysis

Machine translation

Recommendation

COMPUTING

High performance computing

Public/private cloud computing

On-premise computing

5G base station computing

- Automate the deployment of your models to Lightmatter® hardware

- Optimize your neural network model performance using the Idiom® software stack